With the rise of Generative AI, 2025 is set to extend the realm of intelligent apps that accelerate productivity and efficiency. While LangChain (launched in 2022) became the first popular framework for building LLM-based applications, open-source and product communities have since been innovating rapidly to make building such applications easier.

Agentic AI has emerged as one of the most promising GenAI building blocks in 2025, and a disparate set of frameworks has emerged to build agentic apps. This article summarizes the key agentic frameworks to watch in 2025; consolidation among them is predicted in the future.

Agentic AI will introduce a goal-driven digital workforce that autonomously makes plans and takes actions — an extension of the workforce that doesn’t need vacations or other benefits.

Gartner

#1 – LangChain

High-level Architecture:

Key Features:

- LangChain has become a de facto standard for building AI apps, with 1M+ builders and ~100K GitHub stars.

- Comprehensive integrations with model vendors, cloud providers, third-party libraries, diverse vector databases, and more.

- Broad community knowledge and developer awareness make it the most commonly used framework.
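One way to picture why broad vendor integration matters: if prompt templates, models, and output parsers share a common interface, one vendor's model can be swapped for another without rewriting the application logic. The sketch below illustrates that composition pattern in plain Python under that assumption; it does not use LangChain's API, and `ChatModel`, `EchoModel`, and `make_chain` are hypothetical names standing in for real vendor clients and chain constructs.

```python
from typing import Callable, Dict, Protocol


class ChatModel(Protocol):
    """Common interface every (hypothetical) vendor client implements."""

    def invoke(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical stand-in for a vendor-specific model client."""

    def __init__(self, vendor: str) -> None:
        self.vendor = vendor

    def invoke(self, prompt: str) -> str:
        # A real client would call the vendor's API here.
        return f"[{self.vendor}] {prompt}"


def make_chain(
    template: str, model: ChatModel, parse: Callable[[str], str]
) -> Callable[[Dict[str, str]], str]:
    """Compose prompt template -> model -> output parser into one callable."""

    def run(inputs: Dict[str, str]) -> str:
        prompt = template.format(**inputs)
        return parse(model.invoke(prompt))

    return run


# Swapping vendors only changes the model argument, not the chain logic.
chain_a = make_chain("Summarize: {text}", EchoModel("vendor-a"), str.strip)
chain_b = make_chain("Summarize: {text}", EchoModel("vendor-b"), str.strip)
print(chain_a({"text": "agents plan and act "}))
print(chain_b({"text": "agents plan and act "}))
```

The design choice here is structural typing (`Protocol`): any object with an `invoke(prompt) -> str` method can be plugged in, which is the property that makes large integration catalogs practical.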

Suitable for (Pros):

- Well suited to enterprise development, given its wide adoption as a standard and community-driven support.

- Building the foundational blocks of enterprise GenAI applications: LangChain is best suited for creating enterprise-specific frameworks.

- It is best suited where compatibility with third-party vendors is required and where, given LangChain's wide adoption, future integration with other solutions or products is anticipated.

Where other frameworks fare better (Cons):

- Complexity and a steeper learning curve, owing to the sheer number of integrations and the overall code complexity. For simpler or more specific use cases, other frameworks may be a better fit depending on the context.

- The continuous stream of new features and changes requires developers to keep their code up to date, with the risk of breaking changes, incompatible libraries, etc.

LangChain has inspired the developer community to build similar frameworks in other languages, such as LangChain4j for Java, LangChainGo for Go, and LangChain for C#.

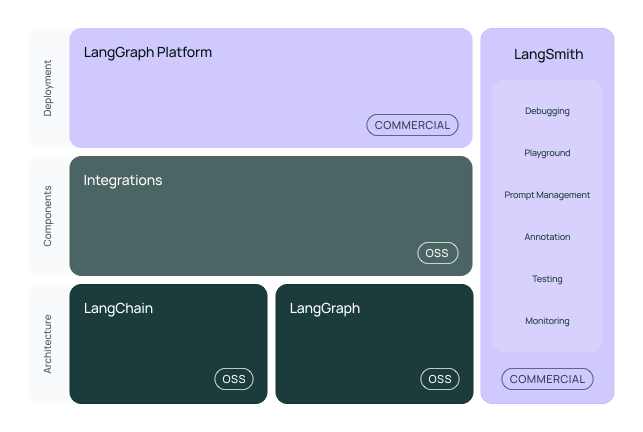

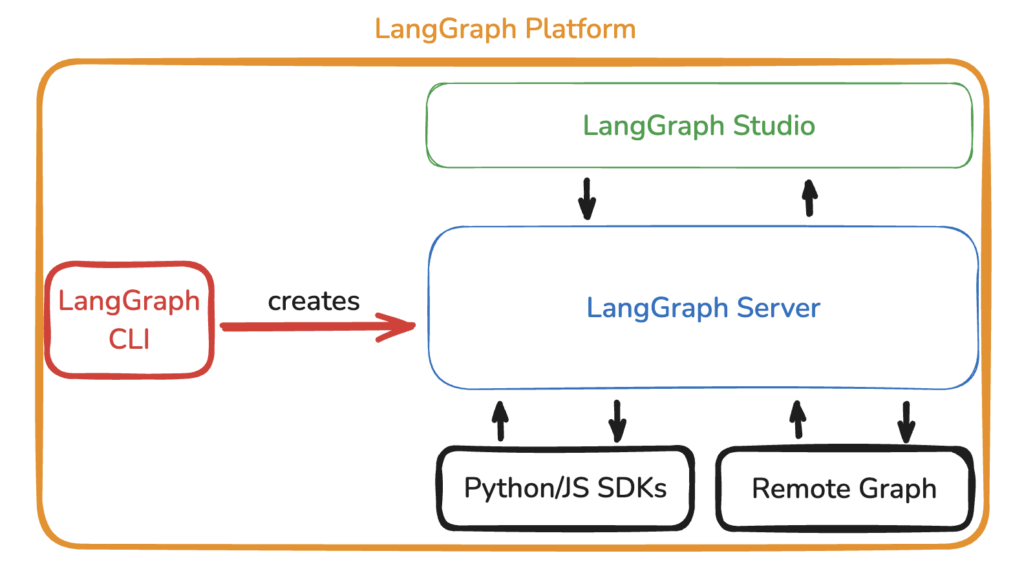

#2 – LangGraph

High-level Architecture:

Key Features:

- LangGraph is an open-source framework from the LangChain team for building agentic architectures. LangGraph Platform is a commercial solution for deploying agentic applications to production.

- Stateful design, graph-based workflow, multi-agent capabilities, native integration with LangChain, enhanced observability with LangSmith, IDE support, and wider community support are key features of the platform.
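To make "stateful design" and "graph-based workflow" concrete: nodes read a shared state and return partial updates, and edges (including conditional ones) decide which node runs next, which allows loops such as draft-and-revise cycles. The sketch below is a plain-Python approximation under that assumption; `MiniGraph`, `write`, `review`, and the two-revision stopping rule are hypothetical and are not LangGraph's API.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]
Node = Callable[[State], State]   # returns a partial state update
Router = Callable[[State], str]   # returns the name of the next node
END = "__end__"


class MiniGraph:
    """Hypothetical minimal state graph; not LangGraph's StateGraph."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.routers: Dict[str, Router] = {}

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, router: Router) -> None:
        self.routers[src] = router

    def run(self, start: str, state: State, max_steps: int = 10) -> State:
        current, steps = start, 0
        while current != END:
            if steps >= max_steps:
                raise RuntimeError("step limit reached; possible infinite loop")
            # Merge the node's partial update into the shared state.
            state = {**state, **self.nodes[current](state)}
            current = self.routers[current](state)
            steps += 1
        return state


def write(state: State) -> State:
    n = state["revisions"] + 1
    return {"draft": f"answer v{n} to {state['question']}", "revisions": n}


def review(state: State) -> State:
    return {}  # a real reviewer might call an LLM; this one changes nothing


graph = MiniGraph()
graph.add_node("write", write)
graph.add_node("review", review)
graph.add_edge("write", lambda s: "review")
# Conditional edge: loop back for another draft until two revisions exist.
graph.add_edge("review", lambda s: END if s["revisions"] >= 2 else "write")

final = graph.run("write", {"question": "q1", "draft": "", "revisions": 0})
print(final["draft"])
```

Keeping state as an explicit value passed between nodes is what makes such workflows inspectable and resumable; the `max_steps` guard is a simple safeguard against cycles that never reach `END`.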

Suitable for (Pros):

- Well suited to enterprise multi-agent development, given its wide adoption as a standard and community-driven support.

- Suitable where broad compatibility is required, with a forward-looking view of integration with different solutions or products.

- Best suited to organizations already within the LangChain ecosystem, as it avoids introducing yet another framework into the enterprise.

Where other frameworks fare better (Cons):

- Enterprise features such as security, visual development, and enhanced observability are offered through the commercial version.

- As with LangChain, developers have cited dependency management and the framework's complexity as major obstacles.

#3 – Autogen

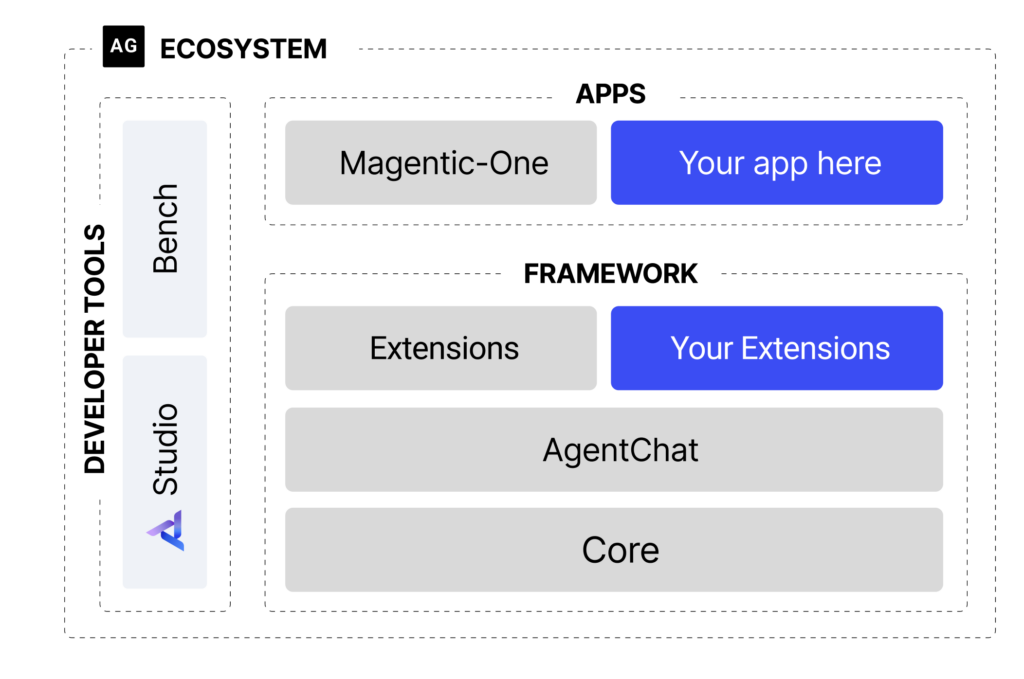

High-level Architecture:

Key Features:

- Autogen is a programming framework, developed by Microsoft, for building agentic AI agents and applications. It provides a multi-agent conversation framework as a high-level abstraction.

- Asynchronous messaging, modularity and extensibility, observability and debugging, scalability and distribution, built-in and community extensions, cross-language support, and full type support are key features of the Autogen framework.

- AutoGen supports enhanced LLM inference APIs, which can be used to improve inference performance and reduce cost.
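A multi-agent conversation with asynchronous messaging can be pictured as agents that exchange messages over queues until one of them signals termination. The sketch below uses only `asyncio`; the `assistant` and `user_proxy` coroutines, the canned reply, and the `"TERMINATE"` keyword are illustrative assumptions rather than AutoGen's actual classes or protocol.

```python
import asyncio
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    sender: str
    content: str


async def assistant(inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """Replies to each message until it receives the termination signal."""
    while True:
        msg: Message = await inbox.get()
        if msg.content == "TERMINATE":
            return
        # Hypothetical reply logic standing in for an LLM call.
        await outbox.put(Message("assistant", f"plan for: {msg.content}"))


async def user_proxy(
    task: str, inbox: asyncio.Queue, outbox: asyncio.Queue, transcript: List[Message]
) -> None:
    """Sends a task, records the reply, then ends the conversation."""
    await outbox.put(Message("user", task))
    reply: Message = await inbox.get()
    transcript.append(reply)
    await outbox.put(Message("user", "TERMINATE"))


async def converse(task: str) -> List[Message]:
    to_assistant: asyncio.Queue = asyncio.Queue()
    to_user: asyncio.Queue = asyncio.Queue()
    transcript: List[Message] = []
    # Both agents run concurrently and communicate only through the queues.
    await asyncio.gather(
        assistant(to_assistant, to_user),
        user_proxy(task, to_user, to_assistant, transcript),
    )
    return transcript


transcript = asyncio.run(converse("book a team meeting"))
print(transcript)
```

Because agents only share queues, each one could in principle run in a separate process or on a separate host, which is the motivation behind message-based, distributed agent runtimes.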

Suitable for (Pros):

- When alignment with open-source and Microsoft ecosystems is a priority, Autogen is a suitable solution for building agentic applications and AI agents.

- AutoGen offers an evolving ecosystem that supports a wide range of applications across domains and levels of complexity. It also includes AutoGen Studio, a UI application for prototyping and managing agents without writing code.

Where other frameworks fare better (Cons):

- Autogen remains experimental and is not yet fully production-ready. It is better suited to researching complex agent interactions, prototyping new multi-agent systems, or experimenting with advanced AI agent designs.

- While Autogen is open source, dependency on Microsoft solutions can be a consideration depending on the organizational context. Magentic-One, built on top of Autogen, offers advanced capabilities as a high-performing generalist agentic system for enterprises.

- The complexity of setting up Autogen, particularly within an enterprise, has been reported as a key concern.

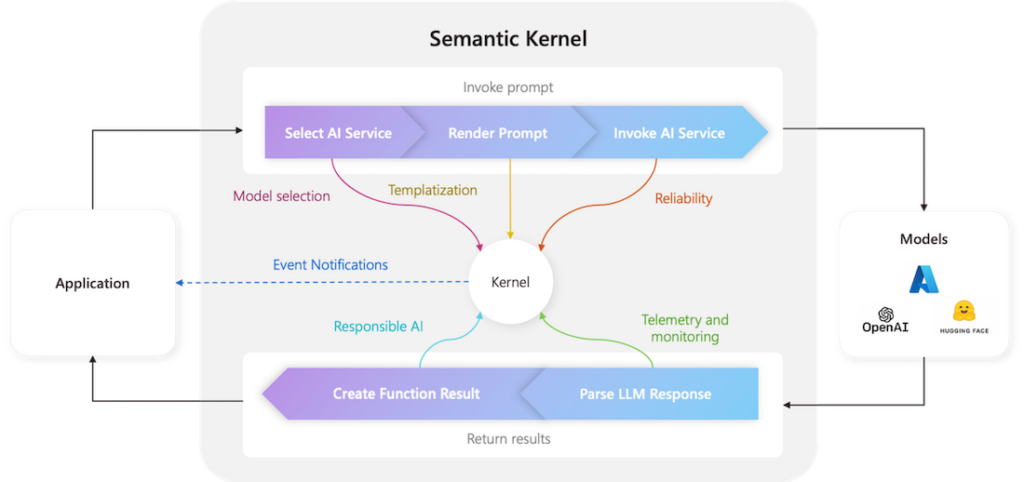

#4 – Semantic Kernel

High-level Architecture:

Key Features:

- Semantic Kernel (by Microsoft) is designed for creating stable, enterprise-ready applications with strong integration capabilities.

- According to Microsoft, Semantic Kernel is a production-ready SDK that integrates large language models (LLMs) and data stores into applications, enabling the creation of product-scale GenAI solutions. Semantic Kernel supports multiple programming languages: C#, Python, and Java. Its Agent and Process Frameworks are in preview, enabling customers to build single-agent and multi-agent solutions.

- It includes two core frameworks: the Agent Framework and the Process Framework. The Semantic Kernel Agent Framework provides a platform within the Semantic Kernel ecosystem for creating AI agents and incorporating agentic patterns into any application. The Semantic Kernel Process Framework provides an approach to optimizing AI integration with your business processes.
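As a rough illustration of how such an SDK fits together, functions can be registered with a central kernel under plugin-qualified names, and a business process can be modeled as an ordered list of steps that invoke those functions. The sketch below is a plain-Python approximation under that assumption; `MiniKernel`, `register`, and `run_process` are hypothetical names and are not Semantic Kernel's API.

```python
from typing import Callable, Dict, List


class MiniKernel:
    """Hypothetical function registry; not Semantic Kernel's Kernel class."""

    def __init__(self) -> None:
        self._functions: Dict[str, Callable[[str], str]] = {}

    def register(self, plugin: str, name: str, fn: Callable[[str], str]) -> None:
        # Functions are addressed as "plugin.name", grouping related skills.
        self._functions[f"{plugin}.{name}"] = fn

    def invoke(self, qualified_name: str, text: str) -> str:
        return self._functions[qualified_name](text)


def run_process(kernel: MiniKernel, steps: List[str], payload: str) -> str:
    """Run a business process as ordered steps, feeding each output forward."""
    for step in steps:
        payload = kernel.invoke(step, payload)
    return payload


kernel = MiniKernel()
kernel.register("text", "normalize", lambda t: t.strip().lower())
kernel.register("ticket", "tag", lambda t: f"[ticket] {t}")
print(run_process(kernel, ["text.normalize", "ticket.tag"], "  Refund REQUEST "))
```

Separating "what functions exist" (the registry) from "in which order they run" (the process) is what lets the same building blocks be reused by agents and by fixed business workflows alike.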

Suitable for (Pros):

- If you need to build a reliable AI agent for a production environment with strong enterprise-level support and integration with existing systems.

- When SDKs are needed in multiple languages, as Semantic Kernel supports C# (.NET), Python, and Java.

- Developer training and enterprise support are available, particularly in the Microsoft Azure environment, including a course on using Semantic Kernel with Azure.

Where other frameworks fare better (Cons):

- Semantic Kernel is an SDK, whereas competing frameworks provide higher-level abstractions and user interfaces for building simpler agents.

- The Agent Framework is still evolving (agents are currently not available for Java).

- When vendor dependency on Microsoft needs to be avoided, other options can be considered as per the context.

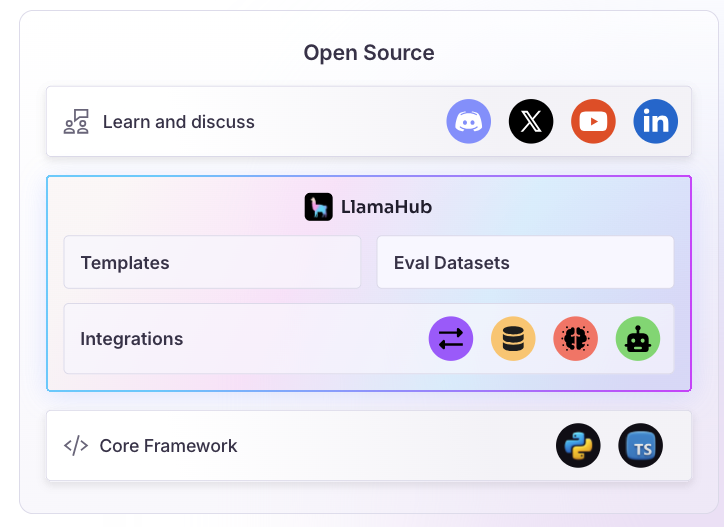

#5 – LlamaIndex

High-level Architecture:

Key Features:

- LlamaIndex started with data framework capabilities for LLM applications and has evolved to cover AI agents, document parsing & indexing, workflow, connectors-based integration, modularity & extensibility, and many more capabilities.

- It offers LlamaCloud, a SaaS knowledge management hub for AI agents. LlamaParse is a differentiated offering for transforming unstructured data into LLM-optimized formats.

- LlamaHub is a useful initiative that provides a centralized place to explore agents, LLMs, vector stores, data loaders, etc.
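At its core, the data-framework side of LlamaIndex is about indexing content so that the most relevant pieces can be retrieved and passed to an LLM. The sketch below shows that index-then-retrieve idea with the standard library only: word-count vectors and cosine similarity stand in for learned embeddings and a vector store. `TinyVectorIndex` and the sample documents are hypothetical; this is not LlamaIndex's API.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class TinyVectorIndex:
    """Hypothetical in-memory index; word counts stand in for embeddings."""

    def __init__(self, docs: List[str]) -> None:
        self.docs = docs
        self.vectors = [Counter(tokenize(d)) for d in docs]

    @staticmethod
    def _cosine(a: Counter, b: Counter) -> float:
        dot = sum(count * b[token] for token, count in a.items())
        norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
            sum(v * v for v in b.values())
        )
        return dot / norm if norm else 0.0

    def retrieve(self, query: str, top_k: int = 1) -> List[str]:
        """Return the top_k documents most similar to the query."""
        q = Counter(tokenize(query))
        ranked = sorted(
            range(len(self.docs)),
            key=lambda i: self._cosine(q, self.vectors[i]),
            reverse=True,
        )
        return [self.docs[i] for i in ranked[:top_k]]


index = TinyVectorIndex([
    "Invoices are due within 30 days of receipt.",
    "The VPN must be used when working remotely.",
    "Annual leave requests go through the HR portal.",
])
print(index.retrieve("when are invoices due?"))
```

In a real RAG setup, the retrieved text would be inserted into the LLM prompt as context; swapping the word counts for embeddings changes the similarity function but not the overall flow.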

Suitable for (Pros):

- LlamaIndex has evolved into a compelling alternative to LangChain, particularly for data-intensive LLM applications.

- The ability to parse and index complex documents efficiently with LlamaCloud makes it a compelling option for enterprises seeking quicker time-to-market.

- Building knowledge-intensive AI systems like chatbots and question-answering systems.

Where other frameworks fare better (Cons):

- Primarily focused on data indexing and retrieval, with less emphasis on complex agent behaviors and decision-making. However, the framework's evolution toward building agentic apps shows promise.

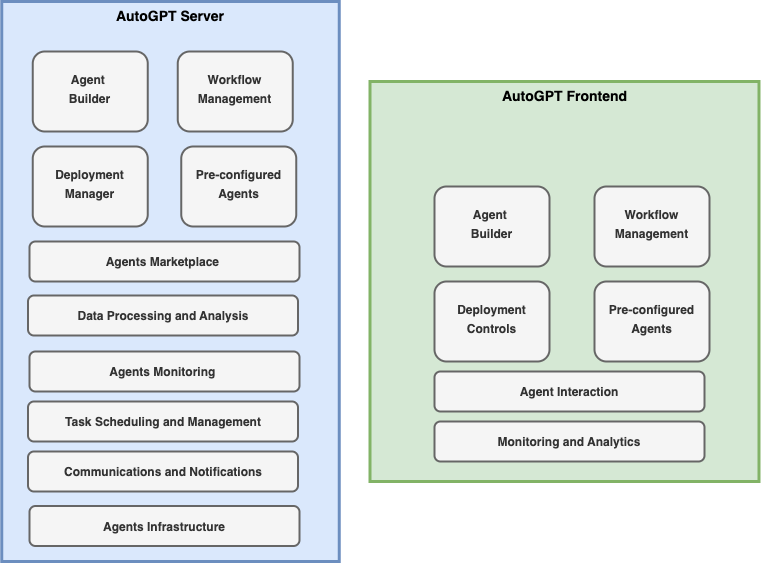

#6 – AutoGPT

High-level Architecture:

Key Features:

- AutoGPT, built by Significant Gravitas, is a powerful platform for creating, deploying, and managing continuous AI agents that automate complex workflows. It was originally built on top of OpenAI's GPT but has since been extended to support additional LLMs (Anthropic, Groq, Llama).

- Key features: seamless integration and low-code workflows, autonomous operation and continuous agents, intelligent automation and maximum efficiency, and reliable performance with predictable execution.
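Continuous, autonomous agents of this kind typically run a loop: plan the next action toward a goal, execute it with a tool, record the result, and stop when the goal is met or a step budget runs out. The sketch below shows that loop; the rule-based `plan_next` planner, the `search` and `summarize` tools, and the step limit are hypothetical stand-ins for LLM-driven planning, not AutoGPT's implementation.

```python
from typing import Callable, Dict, List, Optional, Tuple

Tool = Callable[[str], str]


def plan_next(goal: str, history: List[str]) -> Optional[Tuple[str, str]]:
    """Hypothetical rule-based planner standing in for an LLM.

    Returns (tool name, argument), or None when the goal is considered met.
    """
    if not history:
        return ("search", goal)
    if len(history) == 1:
        return ("summarize", history[-1])
    return None


def run_agent(goal: str, tools: Dict[str, Tool], max_steps: int = 5) -> List[str]:
    """Plan -> act -> record, until the planner stops or the budget runs out."""
    history: List[str] = []
    for _ in range(max_steps):
        action = plan_next(goal, history)
        if action is None:
            break
        tool_name, argument = action
        history.append(tools[tool_name](argument))
    return history


tools: Dict[str, Tool] = {
    "search": lambda q: f"results for '{q}'",
    "summarize": lambda text: f"summary of {text}",
}
print(run_agent("competitor pricing", tools))
```

The explicit `max_steps` budget matters in practice: an autonomous loop driven by a model has no guaranteed stopping point, so a hard cap bounds cost and runaway behavior.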

Suitable for (Pros):

- When a no-code or low-code approach to building agents is preferred, with the ability to build agents in the cloud (a self-hosted option is available, but its setup is more complex).

Where other frameworks fare better (Cons):

- Vendor dependency and lock-in for advanced features can be key concerns for enterprises. The cloud-hosted solution is on the roadmap and is currently offered only to users on a waitlist.

- Licensing complexity: AutoGPT is currently dual-licensed, although the majority of the code is offered under the MIT license.

- Support for additional LLMs, such as Google's Gemini, along with community support, will continue to be a key challenge.

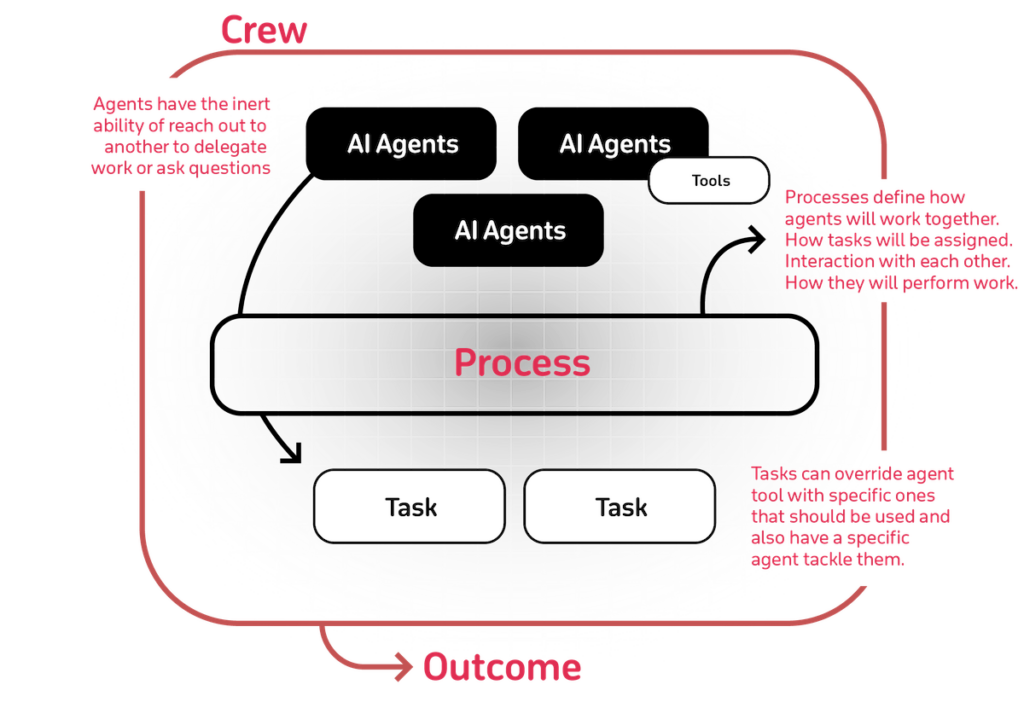

#7 – CrewAI

High-level Architecture:

Key Features:

- CrewAI emerged as a promising multi-agent framework to build and deploy workflow-based applications with support from a wide array of LLMs and Cloud providers.

- Multi-agent collaboration, structured workflow design, user-friendly interface, integration flexibility, and community support are salient features of CrewAI.
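Role-based collaboration in a structured workflow can be approximated as a sequence of tasks, each assigned to an agent with a role, where each task's output becomes context for the next. The sketch below is a plain-Python illustration under that assumption; `CrewMember`, `Assignment`, and `run_sequential` are hypothetical names, not CrewAI's classes, and the members format strings instead of calling an LLM.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CrewMember:
    role: str
    goal: str
    # (task description, context from previous tasks) -> output
    act: Callable[[str, str], str]


@dataclass
class Assignment:
    description: str
    member: CrewMember


def run_sequential(assignments: List[Assignment]) -> str:
    """Execute assignments in order, passing each output on as context."""
    context = ""
    for a in assignments:
        context = a.member.act(a.description, context)
    return context


# Hypothetical members whose "work" is string formatting instead of LLM calls.
researcher = CrewMember("Researcher", "gather facts", lambda d, ctx: f"notes on {d}")
writer = CrewMember("Writer", "draft copy", lambda d, ctx: f"{d} based on ({ctx})")

result = run_sequential([
    Assignment("product launch", researcher),
    Assignment("marketing email", writer),
])
print(result)
```

Giving each member an explicit role and goal is what makes such workflows easy for business users to read, which aligns with the simplicity the article highlights for lightweight agents.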

Suitable for (Pros):

- CrewAI has emerged as the fastest-growing AI agent ecosystem and raised $18M in funding in October 2024. The simplicity of creating business-friendly agents makes it easy to understand and realize the value of GenAI quickly.

- Quicker time-to-market with out-of-the-box customization; better suited to building lightweight agents, such as marketing agents.

Where other frameworks fare better (Cons):

- The ability to handle large enterprise-specific complex scenarios with data integration has not been production-tested and will need to be assessed in the future.

- Vendor dependency and lock-in are key considerations, and whether CrewAI might be acquired by one of the hyperscalers or another player remains an open question.

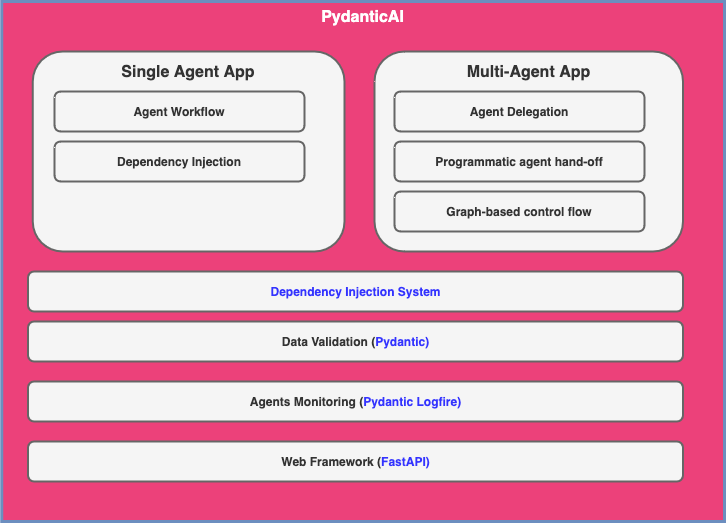

#8 – PydanticAI

High-level Architecture:

Key Features:

- PydanticAI is built by the Pydantic team to bring "that FastAPI feeling" to GenAI app development. It integrates with Pydantic Logfire for observability of GenAI applications and supports multiple LLMs and the broader ecosystem.

- The salient features are the Pydantic approach, model agnostic implementation, real-time observability, type-safety, graph-support with Pydantic Graph, dependency injection, and simplicity.
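Type-safety and dependency injection together mean that an agent's inputs (dependencies) are passed in explicitly, and its output is validated against a declared type instead of being trusted as free text. PydanticAI uses Pydantic models for this; to keep the sketch below self-contained, it uses standard-library dataclasses with a manual check instead. `SupportDeps`, `SupportResult`, `run_typed`, and `fake_model` are hypothetical names, not PydanticAI's API.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class SupportDeps:
    """Dependencies injected at run time (e.g. a lookup table or DB handle)."""
    customer_names: Dict[int, str]


@dataclass(frozen=True)
class SupportResult:
    """Typed shape the model's output must match."""
    reply: str
    escalate: bool

    def __post_init__(self) -> None:
        if not isinstance(self.reply, str) or not isinstance(self.escalate, bool):
            raise TypeError("model output does not match SupportResult")


def run_typed(
    model: Callable[[str], str], prompt: str, customer_id: int, deps: SupportDeps
) -> SupportResult:
    name = deps.customer_names.get(customer_id, "customer")
    raw = model(f"Customer {name} asks: {prompt}")
    # Unknown or missing keys also raise TypeError in the dataclass constructor.
    return SupportResult(**json.loads(raw))


def fake_model(prompt: str) -> str:
    """Hypothetical model that always returns well-formed JSON."""
    return json.dumps({"reply": f"Re: {prompt}", "escalate": False})


result = run_typed(fake_model, "Where is my order?", 7, SupportDeps({7: "Ada"}))
print(result)
```

Injecting `deps` rather than reading globals makes the agent easy to test: a test can pass a fake lookup table, much as FastAPI handlers receive their dependencies.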

Suitable for (Pros):

- Applications that already follow the Pydantic and FastAPI approach and need a simple framework aligned with the associated enterprise technology stack.

- It is suitable for simple scenarios as the framework is still evolving.

Where other frameworks fare better (Cons):

- This framework is still in the early stages (beta) and is expected to introduce many changes as it progresses.

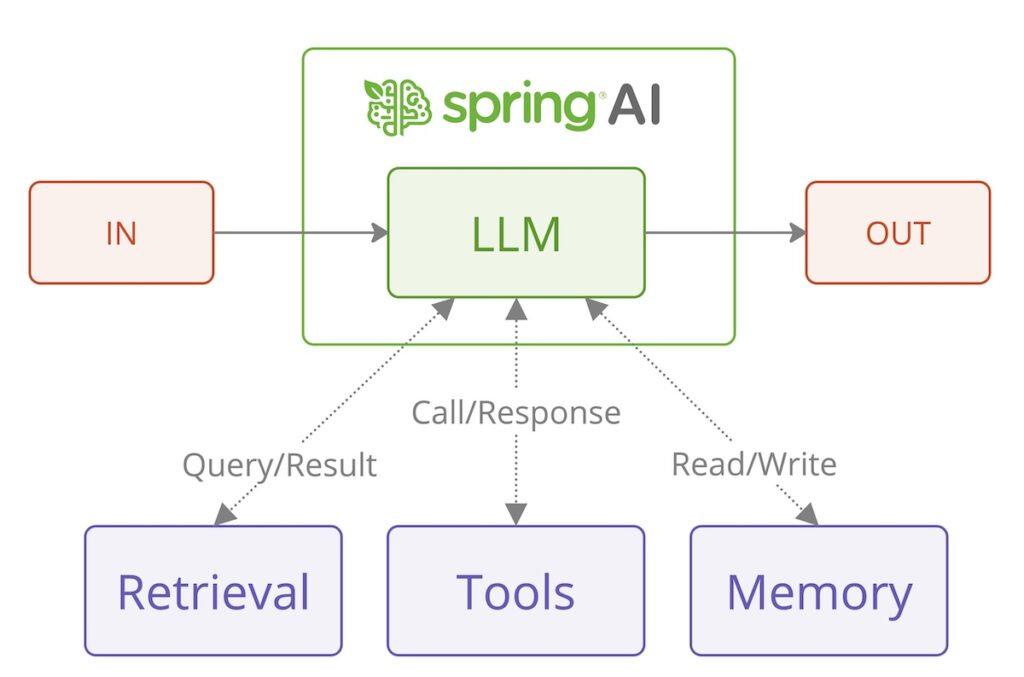

#9 – Spring AI

High-level Architecture:

Key Features:

- Inspired by LangChain, Spring AI leverages the Spring ecosystem to build GenAI applications in Java.

- Key features include support for multiple LLMs, observability features in the Spring ecosystem, model evaluation, Advisors API for encapsulating recurring Generative AI patterns, chat conversations, and RAG.
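The Advisors API encapsulates recurring patterns, such as conversation memory or request guarding, as interceptors that wrap each model call. To stay consistent with the other examples in this article, the sketch below shows the interceptor-chain idea in Python rather than Java; `memory_advisor`, `guard_advisor`, `wrap`, and `build_client` are hypothetical names and do not mirror Spring AI's actual interfaces.

```python
from typing import Callable, List

Call = Callable[[str], str]            # request -> response
Advisor = Callable[[str, Call], str]   # (request, next call) -> response


def memory_advisor(history: List[str]) -> Advisor:
    """Prepends prior turns to the prompt and records the new exchange."""
    def advise(request: str, nxt: Call) -> str:
        prompt = "\n".join(history + [request])
        response = nxt(prompt)
        history.extend([request, response])
        return response
    return advise


def guard_advisor(banned_word: str) -> Advisor:
    """Short-circuits requests containing a banned word."""
    def advise(request: str, nxt: Call) -> str:
        if banned_word in request.lower():
            return "Request blocked."
        return nxt(request)
    return advise


def wrap(advisor: Advisor, nxt: Call) -> Call:
    return lambda request: advisor(request, nxt)


def build_client(model: Call, advisors: List[Advisor]) -> Call:
    """Chain advisors so the first one in the list runs first."""
    call = model
    for advisor in reversed(advisors):
        call = wrap(advisor, call)
    return call


history: List[str] = []
client = build_client(lambda p: f"echo: {p}", [guard_advisor("password"), memory_advisor(history)])
print(client("hello"))
print(client("share the password"))
print(client("and again"))
```

Because the guard runs before the memory advisor, a blocked request never reaches the model and is never written to the conversation history.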

Suitable for (Pros):

- For enterprise GenAI applications built on the Spring ecosystem, it removes the need to learn additional frameworks or languages.

- It provides seamless integration with the broader Spring ecosystem to leverage the libraries available for data connectivity, asynchronous processing, system integration, and more.

Where other frameworks fare better (Cons):

- Spring AI is relatively new and still in its early stages; its features should be assessed against the requirements of complex business scenarios, with alternative frameworks considered where gaps exist.

#10 – Haystack

High-level Architecture:

Key Features:

- Haystack (built by deepset) is an open-source framework for building production-ready LLM applications, RAG pipelines, and complex search applications for enterprises.

- It is built on modular architecture principles, combining technology from OpenAI, Chroma, Marqo, and other open-source projects such as Hugging Face's Transformers and Elasticsearch.

Suitable for (Pros):

- It is suitable for building LLM applications on any cloud, with deepset Cloud serving as an LLM platform that provides built-in LLMOps capabilities.

- Building custom RAG pipelines, with Jinja templates for components.

- deepset Studio is a free AI application development environment for Haystack, which augments the development lifecycle.
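The modular, component-based design can be sketched as a pipeline in which each component consumes named inputs and emits named outputs: a retriever finds documents, a prompt builder fills a template, and a generator produces the answer. Haystack's prompt building uses Jinja templates; to stay dependency-free, the sketch below substitutes Python's `string.Template`. `MiniPipeline`, the keyword retriever, and the stub generator are hypothetical and are not Haystack's API.

```python
import re
from string import Template
from typing import Any, Callable, Dict, List, Set, Tuple

Component = Callable[..., Dict[str, Any]]


class MiniPipeline:
    """Hypothetical linear pipeline; not Haystack's Pipeline class."""

    def __init__(self) -> None:
        self.steps: List[Tuple[str, Component]] = []

    def add(self, name: str, component: Component) -> None:
        self.steps.append((name, component))

    def run(self, **inputs: Any) -> Dict[str, Any]:
        data: Dict[str, Any] = dict(inputs)
        for _name, component in self.steps:
            # Each component reads what it needs and adds its outputs.
            data.update(component(**data))
        return data


DOCS = ["Pipelines are built from components.", "Paris is the capital of France."]


def words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def retriever(query: str, **_: Any) -> Dict[str, Any]:
    # Keyword overlap stands in for BM25 or embedding retrieval.
    return {"documents": [d for d in DOCS if words(query) & words(d)]}


PROMPT = Template("Context: $context\nQuestion: $query\nAnswer:")


def prompt_builder(query: str, documents: List[str], **_: Any) -> Dict[str, Any]:
    return {"prompt": PROMPT.substitute(context=" ".join(documents), query=query)}


def generator(prompt: str, **_: Any) -> Dict[str, Any]:
    return {"answer": "<LLM output would go here>"}  # stub for a model call


pipe = MiniPipeline()
pipe.add("retriever", retriever)
pipe.add("prompt_builder", prompt_builder)
pipe.add("generator", generator)
result = pipe.run(query="What is the capital of France?")
print(result["prompt"])
```

Because components communicate only through named inputs and outputs, any one of them (for example, the retriever) can be replaced without touching the others, which is the practical payoff of the modular design.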

Where other frameworks fare better (Cons):

- Multi-agent capabilities have yet to be battle-tested, and the roadmap should be reviewed to understand the bigger picture.

In addition, the following emerging frameworks are gaining popularity and provide compelling alternatives where required:

- OpenAI Swarm: An educational framework (not production-ready) exploring ergonomic, lightweight multi-agent orchestration.

- MetaGPT: A multi-agent framework, built by researchers and described in a research paper, that promotes the idea of meta-programming.

- Flowise: An open-source framework providing drag-and-drop UI to build agents with customized LLM flows.

- Langflow: An open-source framework (acquired by DataStax) to build flow-based GenAI apps interactively.

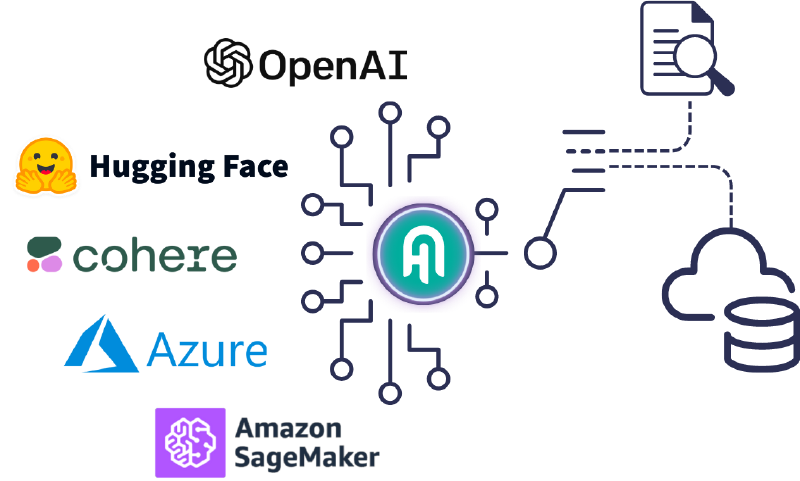

- OpenAGI: A simple framework, built by AI Planet, for building human-like agents.

- Camel-AI.org: An open-source multi-agent framework for building customizable agents, inspired by the research paper CAMEL (Communicative Agents for “Mind” Exploration of Large Language Model Society).

A comparative view of the leading frameworks is shown below:

| Key Attributes | LangChain | LangGraph | Autogen | Semantic Kernel | LlamaIndex | AutoGPT | CrewAI |
|---|---|---|---|---|---|---|---|
| License | MIT | MIT | MIT | MIT | MIT | MIT | MIT |
| Open-source | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Developed by | LangChain | LangChain | Microsoft | Microsoft | LlamaIndex | Significant Gravitas | CrewAI |
| GitHub Stars (as of Jan 2025) | 98K | 8K | 38K | 38K | 171K | 25K | |
| Used By (GitHub public repos) | 170K | 10K | 3K | 16K | N/A | 7K | |
| Language | Python, TypeScript | Python, TypeScript | Python, C# | Python, C#, Java | Python, TypeScript | Python | Python |
| Enterprise Support | Yes | Yes | Yes | Yes | Yes | Yes | |
| Deployment Model | Self-hosted | SaaS & Self-hosted | Self-hosted | SaaS & Self-hosted | SaaS & Self-hosted | SaaS & Self-hosted | |

To conclude, while each framework offers competitive capabilities, most are still evolving, given the pace of innovation in the Generative AI landscape. Which framework is appropriate depends on various factors, such as enterprise context, application and business requirements, security, performance, and other non-functional requirements.

Feel free to share your feedback and experience in the comments!

Disclaimer:

All data and information provided on this blog are for informational purposes only. The author makes no representations as to the accuracy, completeness, correctness, suitability, or validity of any information on this blog and will not be liable for any errors, omissions, or delays in this information or any losses, injuries, or damages arising from its display or use. This is a personal view and the opinions expressed here represent my own and not those of my employer or any other organization. The interpretation of the report is based on the author's assessment of the content – there might be differences of opinion, which can't be attributed back to the author.
